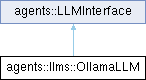

Implementation of LLMInterface for Ollama models.

#include <ollama_llm.h>

Public Member Functions

| Return type | Member function | Description |
|---|---|---|
| | `OllamaLLM(const std::string &api_key, const std::string &model = "llama3")` | Constructor. |
| | `~OllamaLLM() override = default` | Destructor. |
| `std::vector<std::string>` | `getAvailableModels() override` | Get available models from Ollama. |
| `void` | `setModel(const std::string &model) override` | Set the model to use. |
| `std::string` | `getModel() const override` | Get the current model. |
| `void` | `setApiKey(const std::string &api_key) override` | Set the API key (not used for Ollama, but implemented for interface compliance). |
| `void` | `setApiBase(const std::string &api_base) override` | Set the API base URL for the Ollama server. |
| `void` | `setOptions(const LLMOptions &options) override` | Set options for API calls. |
| `LLMOptions` | `getOptions() const override` | Get the current options. |
| `LLMResponse` | `chat(const std::string &prompt) override` | Generate a completion from a prompt. |
| `LLMResponse` | `chat(const std::vector<Message> &messages) override` | Generate a completion from a list of messages. |
| `LLMResponse` | `chatWithTools(const std::vector<Message> &messages, const std::vector<std::shared_ptr<Tool>> &tools) override` | Generate a completion with the available tools. |
| `void` | `streamChat(const std::vector<Message> &messages, std::function<void(const std::string &, bool)> callback) override` | Stream results with a callback. |
| `AsyncGenerator<std::string>` | `streamChatAsync(const std::vector<Message> &messages, const std::vector<std::shared_ptr<Tool>> &tools) override` | Stream results asynchronously. |
| `AsyncGenerator<std::pair<std::string, ToolCalls>>` | `streamChatAsyncWithTools(const std::vector<Message> &messages, const std::vector<std::shared_ptr<Tool>> &tools) override` | Stream chat with tools asynchronously. |
| `virtual Task<LLMResponse>` | `chatAsync(const std::vector<Message> &messages)` | Async chat from a list of messages. |
| `virtual Task<LLMResponse>` | `chatWithToolsAsync(const std::vector<Message> &messages, const std::vector<std::shared_ptr<Tool>> &tools)` | Async chat with tools. |
| `virtual std::optional<JsonObject>` | `uploadMediaFile(const std::string &local_path, const std::string &mime, const std::string &binary = "")` | Provider-optional: upload a local media file to the provider's file storage and return a canonical media envelope (e.g., with fileUri). Default: not supported. |

Detailed Description

Implementation of LLMInterface for Ollama models.

Constructor & Destructor Documentation

◆ OllamaLLM()

`agents::llms::OllamaLLM::OllamaLLM(const std::string &api_key, const std::string &model = "llama3")`

Constructor.

- Parameters
  - api_key: The API key
  - model: The model to use (defaults to "llama3")

Member Function Documentation

◆ chat() [1/2]

`LLMResponse chat(const std::string &prompt)` (override, virtual)

Generate a completion from a prompt.

- Parameters
  - prompt: The prompt
- Returns
  - The completion

Implements agents::LLMInterface.

◆ chat() [2/2]

`LLMResponse chat(const std::vector<Message> &messages)` (override, virtual)

Generate a completion from a list of messages.

- Parameters
  - messages: The messages
- Returns
  - The completion

Implements agents::LLMInterface.
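The two chat() overloads differ only in how the conversation is passed in. The sketch below is a hypothetical illustration, not the library's code: it assumes the prompt overload wraps the prompt in a single user message and forwards to the messages overload. `Message`, `LLMResponse`, and `EchoChatSketch` are minimal stand-ins defined here, and the "model" simply echoes the last message.

```cpp
#include <string>
#include <vector>

// Hypothetical stand-ins for the library's Message and LLMResponse types.
struct Message {
    std::string role;     // e.g. "system", "user", "assistant"
    std::string content;
};

struct LLMResponse {
    std::string content;
};

// Hypothetical fake model exposing the same two chat() overloads.
class EchoChatSketch {
public:
    // Messages overload: "answers" by echoing the content of the last message.
    LLMResponse chat(const std::vector<Message>& messages) {
        if (messages.empty()) return LLMResponse{""};
        return LLMResponse{"echo: " + messages.back().content};
    }

    // Prompt overload: assumed to wrap the prompt as a single user message.
    LLMResponse chat(const std::string& prompt) {
        return chat(std::vector<Message>{Message{"user", prompt}});
    }
};
```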

◆ chatAsync()

`Task<LLMResponse> chatAsync(const std::vector<Message> &messages)` (virtual, inherited)

Async chat from a list of messages.

- Parameters
  - messages: The messages to generate a completion from
- Returns
  - The LLM response
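This page does not document the Task<> type returned by chatAsync(). As a loose analogy only, the sketch below swaps in std::async and std::future from the C++ standard library: the call returns a handle immediately and the caller blocks only when it reads the result. `fakeChat` and `chatAsyncSketch` are hypothetical, and messages are reduced to plain strings.

```cpp
#include <future>
#include <string>
#include <utility>
#include <vector>

// Hypothetical stand-in for a blocking chat call.
std::string fakeChat(const std::vector<std::string>& messages) {
    return "reply to " + std::to_string(messages.size()) + " message(s)";
}

// Starts the chat on a separate thread and returns a handle to the eventual reply.
std::future<std::string> chatAsyncSketch(std::vector<std::string> messages) {
    return std::async(std::launch::async, fakeChat, std::move(messages));
}
```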

◆ chatWithTools()

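`LLMResponse chatWithTools(const std::vector<Message> &messages, const std::vector<std::shared_ptr<Tool>> &tools)` (override, virtual)

Generate a completion with the available tools.

- Parameters
  - messages: The messages
  - tools: The tools
- Returns
  - The completion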

Implements agents::LLMInterface.
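After chatWithTools() returns, a caller usually has to run whichever tools the model asked for. This page does not define `Tool` or the shape of a tool call, so the sketch below uses hypothetical stand-ins (`Tool` with a name and a callable, `ToolCall` with a name and an argument string) and a hypothetical `dispatch` helper that looks a call up by name among the same `std::vector<std::shared_ptr<Tool>>` that was passed to chatWithTools().

```cpp
#include <functional>
#include <memory>
#include <optional>
#include <string>
#include <vector>

// Hypothetical stand-in for the library's Tool type.
struct Tool {
    std::string name;
    std::function<std::string(const std::string&)> run;  // takes arguments, returns a result
};

// Hypothetical stand-in for one tool call requested by the model.
struct ToolCall {
    std::string name;
    std::string arguments;
};

// Runs the requested tool if one with a matching name was offered;
// returns std::nullopt when the model asked for an unknown tool.
std::optional<std::string> dispatch(const std::vector<std::shared_ptr<Tool>>& tools,
                                    const ToolCall& call) {
    for (const auto& tool : tools) {
        if (tool && tool->name == call.name) {
            return tool->run(call.arguments);
        }
    }
    return std::nullopt;
}
```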

◆ chatWithToolsAsync()

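`Task<LLMResponse> chatWithToolsAsync(const std::vector<Message> &messages, const std::vector<std::shared_ptr<Tool>> &tools)` (virtual, inherited)

Async chat with tools.

- Parameters
  - messages: The messages to generate a completion from
  - tools: The tools to use
- Returns
  - The LLM response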

◆ getAvailableModels()

`std::vector<std::string> getAvailableModels()` (override, virtual)

Get available models from Ollama.
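Ollama usually lists local models with a tag suffix such as `llama3:latest`, so an exact comparison against the constructor's default `"llama3"` can miss. Assuming getAvailableModels() returns names in that form, the hypothetical helpers `baseName` and `chooseModel` below pick a preferred model by exact or untagged match and otherwise fall back to the first available one; the result could then be passed to setModel().

```cpp
#include <string>
#include <vector>

// Returns the part of an Ollama-style model name before the ":tag" suffix, if any.
std::string baseName(const std::string& model) {
    return model.substr(0, model.find(':'));
}

// Picks `preferred` if an available model matches it exactly or by base name;
// otherwise falls back to the first available model (empty string if none).
std::string chooseModel(const std::vector<std::string>& available,
                        const std::string& preferred) {
    for (const auto& name : available) {
        if (name == preferred || baseName(name) == preferred) return name;
    }
    return available.empty() ? std::string() : available.front();
}
```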

◆ getModel()

`std::string getModel() const` (override, virtual)

Get the current model.

◆ getOptions()

`LLMOptions getOptions() const` (override, virtual)

Get the current options.

◆ setApiBase()

`void setApiBase(const std::string &api_base)` (override, virtual)

Set the API base URL for the Ollama server.

- Parameters
  - api_base: The API base URL

Implements agents::LLMInterface.
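A stock Ollama server listens on `http://localhost:11434`, and its REST endpoints live under `/api` (for example `/api/chat`). How OllamaLLM combines the value passed to setApiBase() with those paths is not documented here; the hypothetical `joinUrl` helper below is one defensive way to join a base URL and an endpoint so that a trailing or missing slash does not matter.

```cpp
#include <string>

// Joins a base URL such as "http://localhost:11434" with an endpoint path,
// avoiding a doubled or missing '/' between them.
std::string joinUrl(std::string base, const std::string& path) {
    // Drop any trailing slashes from the base.
    while (!base.empty() && base.back() == '/') base.pop_back();
    if (path.empty() || path.front() == '/') return base + path;
    return base + "/" + path;
}
```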

◆ setApiKey()

`void setApiKey(const std::string &api_key)` (override, virtual)

Set the API key (not used for Ollama, but implemented for interface compliance).

- Parameters
  - api_key: The API key

Implements agents::LLMInterface.
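setApiKey() exists only because the shared interface declares it; Ollama itself needs no key. The sketch below illustrates that pattern with hypothetical classes: `ProviderInterface` stands in for the shared interface, and `KeylessProvider` overrides setApiKey() as a no-op so it can still be used through a base-class reference. `usesApiKey` is invented for the example.

```cpp
#include <string>

// Hypothetical slice of an interface that every provider must implement.
class ProviderInterface {
public:
    virtual ~ProviderInterface() = default;
    virtual void setApiKey(const std::string& api_key) = 0;
    virtual bool usesApiKey() const = 0;
};

// A provider that needs no key still overrides setApiKey() so it satisfies
// the interface; the key is simply ignored.
class KeylessProvider : public ProviderInterface {
public:
    void setApiKey(const std::string& /*api_key*/) override {}
    bool usesApiKey() const override { return false; }
};
```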

◆ setModel()

`void setModel(const std::string &model)` (override, virtual)

Set the model to use.

◆ setOptions()

`void setOptions(const LLMOptions &options)` (override, virtual)

Set options for API calls.
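Because getOptions() returns LLMOptions by value, changing the returned object has no effect until it is passed back through setOptions(). The sketch below shows that copy, modify, set sequence with hypothetical stand-ins `OptionsSketch` and `OptionsHolder`; the fields `temperature` and `max_tokens` are assumptions, since this page does not list the members of LLMOptions.

```cpp
#include <optional>

// Hypothetical stand-in for LLMOptions; the real fields are not listed on this page.
struct OptionsSketch {
    double temperature = 0.7;
    std::optional<int> max_tokens;  // unset means "use the server default"
};

// Hypothetical holder mirroring the documented getOptions()/setOptions() pair.
class OptionsHolder {
public:
    OptionsSketch getOptions() const { return options_; }  // returns a copy
    void setOptions(const OptionsSketch& options) { options_ = options; }

private:
    OptionsSketch options_;
};
```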

◆ streamChat()

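`void streamChat(const std::vector<Message> &messages, std::function<void(const std::string &, bool)> callback)` (override, virtual)

Stream results with a callback.

- Parameters
  - messages: The messages
  - callback: The callback, invoked with a text chunk and a bool flag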

Implements agents::LLMInterface.
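The streamChat() callback receives a text chunk and a bool. This page does not say what the bool means; the sketch below assumes it marks the final call, and the hypothetical `fakeStreamChat` sends an empty chunk with the flag set. A consumer that appends every chunk before checking the flag works whether or not the final call also carries text.

```cpp
#include <functional>
#include <string>
#include <vector>

// Same callback shape as the documented streamChat() parameter.
using StreamCallback = std::function<void(const std::string&, bool)>;

// Hypothetical stand-in for streamChat(): delivers pre-split chunks, then
// signals completion. Treating the bool as a "done" flag is an assumption.
void fakeStreamChat(const std::vector<std::string>& chunks, const StreamCallback& callback) {
    for (const auto& chunk : chunks) callback(chunk, false);
    callback("", true);  // final call: no more text
}
```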

◆ streamChatAsync()

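`AsyncGenerator<std::string> streamChatAsync(const std::vector<Message> &messages, const std::vector<std::shared_ptr<Tool>> &tools)` (override, virtual)

Stream results asynchronously.

- Parameters
  - messages: The messages
  - tools: The tools
- Returns
  - An AsyncGenerator of response chunks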

Reimplemented from agents::LLMInterface.

◆ streamChatAsyncWithTools()

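`AsyncGenerator<std::pair<std::string, ToolCalls>> streamChatAsyncWithTools(const std::vector<Message> &messages, const std::vector<std::shared_ptr<Tool>> &tools)` (override, virtual)

Stream chat with tools asynchronously.

- Parameters
  - messages: The messages
  - tools: The tools
- Returns
  - An AsyncGenerator of response chunks and any tool calls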

Reimplemented from agents::LLMInterface.
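Each item yielded by streamChatAsyncWithTools() pairs a text chunk with tool calls. The sketch below folds such a stream into the full reply plus the complete list of requested calls. A plain std::vector stands in for the AsyncGenerator, and `ToolCall`, `ToolCalls`, `StreamResult`, and `collect` are hypothetical, since the real ToolCalls type is not documented on this page.

```cpp
#include <string>
#include <utility>
#include <vector>

// Hypothetical stand-ins: the real ToolCalls type is not documented on this page.
struct ToolCall {
    std::string name;
    std::string arguments;
};
using ToolCalls = std::vector<ToolCall>;

struct StreamResult {
    std::string text;
    ToolCalls tool_calls;
};

// Folds streamed (text chunk, tool calls) pairs into the full reply and the
// complete list of requested tool calls. A vector stands in for the generator.
StreamResult collect(const std::vector<std::pair<std::string, ToolCalls>>& stream) {
    StreamResult result;
    for (const auto& [chunk, calls] : stream) {
        result.text += chunk;
        result.tool_calls.insert(result.tool_calls.end(), calls.begin(), calls.end());
    }
    return result;
}
```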

◆ uploadMediaFile()

`std::optional<JsonObject> uploadMediaFile(const std::string &local_path, const std::string &mime, const std::string &binary = "")` (virtual, inherited)

Provider-optional: upload a local media file to the provider's file storage and return a canonical media envelope (e.g., with fileUri). Default: not supported.

- Parameters
  - local_path: Local filesystem path
  - mime: The MIME type of the media file
  - binary: Optional binary content of the media file
- Returns
  - An optional envelope; std::nullopt if unsupported

Reimplemented in agents::llms::AnthropicLLM, and agents::llms::GoogleLLM.
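OllamaLLM is not in the "Reimplemented in" list above, so it appears to inherit the default uploadMediaFile(), which reports no support by returning std::nullopt. The sketch below shows that virtual-default pattern and a caller that falls back when no envelope comes back. `ProviderBase`, `UploadingProvider`, `describeMedia`, the map-based `JsonObjectSketch`, and the inline fallback are all hypothetical.

```cpp
#include <map>
#include <optional>
#include <string>

// Hypothetical stand-in for JsonObject.
using JsonObjectSketch = std::map<std::string, std::string>;

class ProviderBase {
public:
    virtual ~ProviderBase() = default;
    // Default behaviour described on this page: uploading is not supported.
    virtual std::optional<JsonObjectSketch> uploadMediaFile(const std::string& /*local_path*/,
                                                            const std::string& /*mime*/,
                                                            const std::string& /*binary*/ = "") {
        return std::nullopt;
    }
};

// Hypothetical provider that supports uploads and returns a fileUri.
class UploadingProvider : public ProviderBase {
public:
    std::optional<JsonObjectSketch> uploadMediaFile(const std::string& local_path,
                                                    const std::string& /*mime*/,
                                                    const std::string& /*binary*/) override {
        return JsonObjectSketch{{"fileUri", "files/" + local_path}};
    }
};

// Caller-side pattern: prefer an uploaded reference, otherwise fall back to
// sending the media inline (the fallback strategy is an assumption).
std::string describeMedia(ProviderBase& provider, const std::string& path, const std::string& mime) {
    if (auto envelope = provider.uploadMediaFile(path, mime)) {
        auto it = envelope->find("fileUri");
        if (it != envelope->end()) return "uri:" + it->second;
    }
    return "inline:" + path;
}
```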